Rotem-Gal-Oz, A. (2005). Fallacies of Distributed Computing Explained.

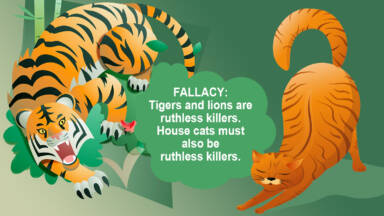

Cognitive biases (built-in patterns of thinking) and fallacies (errors in reasoning) creep into our everyday lives, sometimes without us even knowing it. For example, ever wonder why you work just a little harder, a little quicker, when you think someone is looking over your shoulder, watching you? This is known as the Hawthorne effect: people tend to change their behavior when they know (or think) they are being watched.

Or how about situations where we are trying to solve a problem but keep reaching for the same tool (e.g. a hammer)? That might be the exposure effect: people prefer the same tools and processes just because they are familiar.

Essentially, cognitive biases and fallacies trick us: they throw off our memory, perception, and rationality. So we must keep these blind spots front of mind when designing and building distributed systems.

8 Fallacies

According to Schneider, there are 8 fallacies that bite distributed system designers. These fallacies have stood the test of time. They were drafted over 25 years ago, and designers have been building distributed systems for over 55 years. Despite advances in technology, each one is still a false assumption that designers make today.

1. The network is reliable

2. Latency is zero

3. Bandwidth is infinite

4. The network is secure

5. Topology doesn’t change

6. There is one administrator

7. Transport cost is zero

8. The network is homogeneous

Notice anything in common among these fallacies? They all tend to revolve around assumptions we make about the underlying communication, the network.

Network assumptions

I wouldn’t go so far as to say networks are fragile, but failures (e.g. hardware failures, power outages, faulty cables) happen on a daily basis. Sometimes these failures go unnoticed by our users: their traffic is automatically routed along an alternative (albeit sometimes slightly slower) path, and their dropped messages are resent by the underlying transport protocol.

So, what does this mean for you, the system designer?

To overcome network hiccups, we should either “use a communication medium that supplies full reliable messaging” or employ the following messaging techniques: retry, acknowledge important messages, identify/ignore duplicates, reorder messages (or do not depend on message order), and verify message integrity.
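
To make those techniques concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration, not something taken from the referenced paper: the `Message` and `Receiver` types, the `send_with_retry` helper, the use of sequence numbers for duplicate detection and ordering, and SHA-256 as the integrity check. A real system would also need persistence, timeouts, and a bound on how many out-of-order messages it buffers.

```python
import hashlib
import time
from dataclasses import dataclass, field


def checksum(payload: str) -> str:
    """Digest used to detect payloads corrupted in transit."""
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class Message:
    seq: int       # sequence number: drives duplicate detection and ordering
    payload: str
    digest: str    # checksum of the payload, computed by the sender


def make_message(seq: int, payload: str) -> Message:
    return Message(seq, payload, checksum(payload))


@dataclass
class Receiver:
    next_seq: int = 0                              # next sequence number to deliver
    pending: dict = field(default_factory=dict)    # messages that arrived early
    delivered: list = field(default_factory=list)  # payloads, in order

    def receive(self, msg: Message) -> bool:
        """Return True to acknowledge msg, False to reject it."""
        if checksum(msg.payload) != msg.digest:
            return False              # verify integrity: no ack, sender retries
        if msg.seq < self.next_seq or msg.seq in self.pending:
            return True               # duplicate: acknowledge again, deliver once
        self.pending[msg.seq] = msg.payload
        while self.next_seq in self.pending:       # reorder: release in sequence
            self.delivered.append(self.pending.pop(self.next_seq))
            self.next_seq += 1
        return True


def send_with_retry(send, msg, max_attempts=5, base_delay=0.1):
    """Call send(msg) until it returns True (an ack); return the attempt count."""
    for attempt in range(1, max_attempts + 1):
        try:
            if send(msg):
                return attempt
        except ConnectionError:
            pass                      # treat a transport error like a lost message
        if attempt < max_attempts:
            time.sleep(base_delay * 2 ** (attempt - 1))   # exponential backoff
    raise ConnectionError(f"no acknowledgement after {max_attempts} attempts")
```

Note how the pieces depend on each other: retrying is only safe because the receiver ignores duplicates. If an acknowledgement is lost on the way back, the sender resends, and the receiver acknowledges again without delivering the payload twice.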

In addition to hardening your software, be prepared to thoroughly stress test your system. You’ll want to hamper network performance: drop messages, increase latency, congest the pipes. How well can your system tolerate these conditions? Can your system sustain these performance degradations? Or does your system fail in unexpected ways?
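
One lightweight way to start asking those questions is to inject faults in-process before reaching for real network tooling. The sketch below is only an illustration under stated assumptions: `FlakyChannel` is a hypothetical wrapper (not part of any library) that randomly drops or delays messages on their way to a `deliver` callback, and the drop rate, delay, and seed values are arbitrary.

```python
import random
import time


class FlakyChannel:
    """Wraps a delivery callback and injects message loss and latency."""

    def __init__(self, deliver, drop_rate=0.0, max_delay=0.0, seed=None):
        self._deliver = deliver
        self._drop_rate = drop_rate      # probability in [0, 1] of losing a message
        self._max_delay = max_delay      # upper bound on injected latency, in seconds
        self._rng = random.Random(seed)  # seeded so a failing run can be replayed
        self.sent = 0
        self.dropped = 0

    def send(self, message) -> bool:
        """Return True if the message was delivered, False if it was dropped."""
        self.sent += 1
        if self._rng.random() < self._drop_rate:
            self.dropped += 1
            return False
        if self._max_delay > 0:
            time.sleep(self._rng.uniform(0, self._max_delay))
        self._deliver(message)
        return True


# Usage: push 100 messages through a channel that loses roughly 30% of them,
# retrying each one until it gets through.
received = []
channel = FlakyChannel(received.append, drop_rate=0.3, seed=42)
for i in range(100):
    while not channel.send(i):
        pass  # naive retry; a real client would back off and eventually give up

print(f"sent={channel.sent} dropped={channel.dropped} delivered={len(received)}")
```

Raising `drop_rate` or `max_delay` until something breaks is a cheap way to find out whether the system degrades gracefully or fails in unexpected ways. It does not replace testing against real congestion and device failures.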

Main takeaway

In short, we should assume that the network is unreliable. In response, we should both employ techniques (e.g. retrying messages) that harden our systems and thoroughly test our software under non-ideal conditions, including device failures, congestion, and increased latency.

References

1. Hunt, A. (2008). Pragmatic Thinking and Learning: Refactor Your Wetware.

2. https://fallacyinlogic.com/fallacy-vs-bias/