Notes below are from my first study session at 5:00 AM, before the rest of my morning routine: walking the dogs at the local park, blending a delicious fruit smoothie (see my blog post on the best vegan smoothie), then sitting down at my desk for hours of work. In the evening, I took a detour from watching lectures and focused on the pre-lab assignment instead (write-up on this in another post).

Summary

The rest of the video lectures wrap up the memory system, discussing how virtual memory and its indirection technique offer a larger address space, protection between processes, and sharing between processes. For this to work, we need a page table, a data structure mapping virtual page numbers to physical frame numbers. Within this table are page table entries, each containing metadata that tracks things such as whether the page is valid, readable, executable, and so on. Finally, we can speed up the entire memory lookup process by using a virtually indexed, physically tagged cache, allowing us to check the TLB and the cache in parallel.

Address Translation

Summary

We employ a common CS trick: indirection. This technique provides three benefits: a large address space, protection between processes, and sharing between processes. Each process has its own page table.

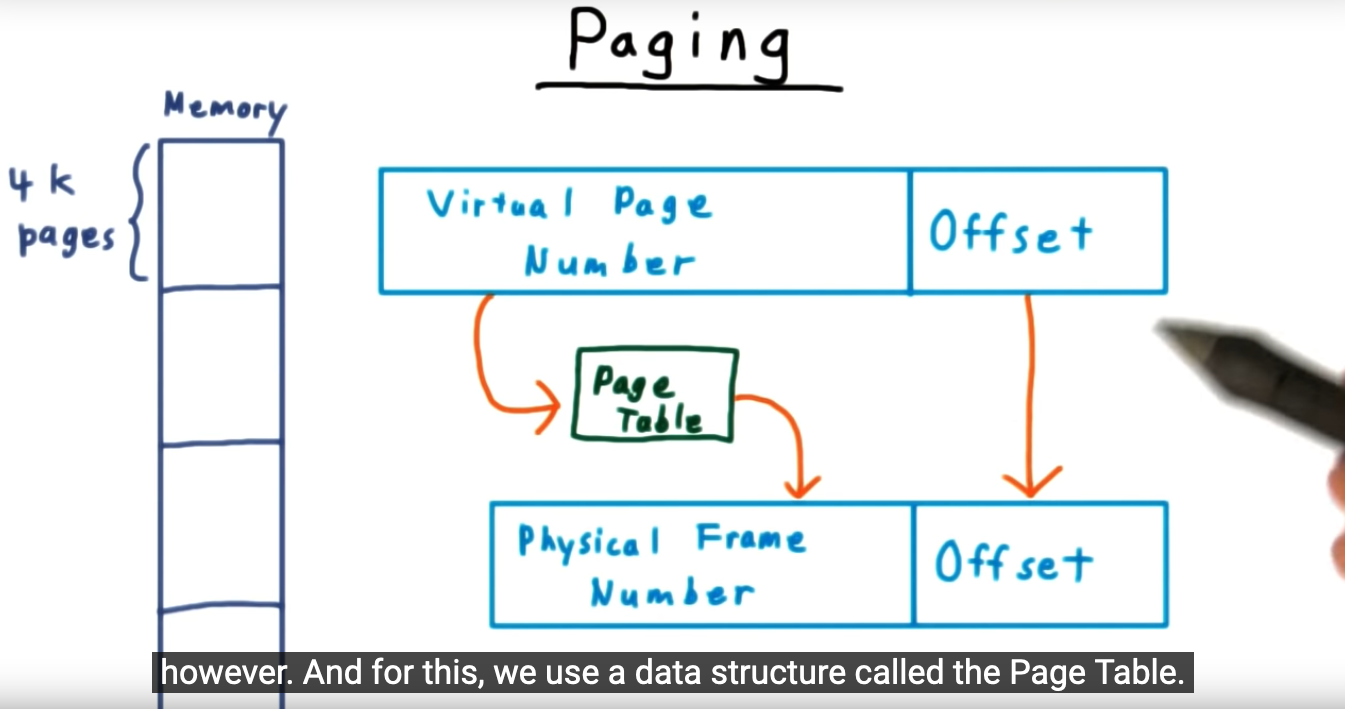

Paging

Summary

Because we can have more virtual memory than physical memory (thanks to indirection, above), we need a mechanism to translate between the two. This mechanism is the page table. And although the VPN and PFN (virtual page number and physical frame number, respectively) can differ, the offset into the page itself is always the same.
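To make the VPN/offset split concrete, here is a minimal sketch of the translation. It assumes 4 KiB pages and models the page table as a plain dict from VPN to PFN; the names `translate` and `page_table` and the example mapping are made up for illustration.

```python
PAGE_SIZE = 4096                          # assumed 4 KiB pages
OFFSET_BITS = PAGE_SIZE.bit_length() - 1  # log2(4096) = 12


def translate(vaddr, page_table):
    """Translate a virtual address using a dict that maps VPN -> PFN."""
    vpn = vaddr >> OFFSET_BITS        # upper bits select the virtual page
    offset = vaddr & (PAGE_SIZE - 1)  # lower bits are the offset within the page
    pfn = page_table[vpn]             # a KeyError here stands in for a page fault
    return (pfn << OFFSET_BITS) | offset  # offset is carried over unchanged


page_table = {0x5: 0x2A}              # hypothetical mapping: virtual page 5 -> frame 0x2A
paddr = translate(0x5123, page_table)
print(hex(paddr))                     # 0x2a123
```

Only the page number changes in the result; the low 12 bits (`0x123`) are the same in the virtual and the physical address.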

Page Table Implementation

Summary

To map a virtual page number to a physical frame number, we need a page table. If we start with a single flat array, it will be huge. Take the page size and convert it to bits (log2 of the page size gives the number of offset bits), then subtract the offset bits from the number of bits in the virtual address. That leaves the VPN bits, and 2 raised to that number is the number of entries. Multiply the number of entries by the size of a PTE (page table entry) and you get the size of the page table. Huge. Instead, we use multiple levels of tables to reduce the size, at the cost of lookup time.
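The arithmetic above can be sketched in a few lines. The address widths, page size, and PTE sizes below are example values I picked, and the two-level walk uses nested dicts purely for illustration (a real page table is an array in memory).

```python
def flat_page_table_bytes(vaddr_bits, page_size, pte_bytes):
    """Size in bytes of a single-level page table (page_size must be a power of two)."""
    offset_bits = page_size.bit_length() - 1       # log2(page_size)
    num_entries = 1 << (vaddr_bits - offset_bits)  # one PTE per virtual page
    return num_entries * pte_bytes


def two_level_lookup(vaddr, outer_table, offset_bits=12, inner_bits=10):
    """Walk a two-level table stored as nested dicts; return the PFN or None."""
    vpn = vaddr >> offset_bits
    outer_index = vpn >> inner_bits              # upper VPN bits pick the inner table
    inner_index = vpn & ((1 << inner_bits) - 1)  # lower VPN bits pick the entry
    inner_table = outer_table.get(outer_index)   # absent inner table: region unmapped
    if inner_table is None:
        return None
    return inner_table.get(inner_index)


size_32 = flat_page_table_bytes(32, 4096, 4)  # 2**20 entries * 4 bytes = 4 MiB
size_64 = flat_page_table_bytes(64, 4096, 8)  # 2**52 entries * 8 bytes = 2**55 bytes
```

The saving in the multi-level version comes from the missing inner tables: unmapped regions of the address space cost nothing, but every lookup now takes two steps instead of one.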

Accelerating address translation

Summary

To avoid hitting memory every time we look up the page table, architects added a translation lookaside buffer (TLB). But it’s not free. I learned that caching virtual page numbers in a TLB creates a problem: how do we tell whether a cached translation belongs to the process that is currently running? We can tackle this by either 1) flushing the TLB during a context switch or 2) adding a unique process identifier to each TLB entry.
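Both options can be shown with a toy model. This is only a sketch: the `TLB` class, its method names, and the `asid` (address space identifier) parameter are my own illustrative choices, and a real TLB has a fixed capacity and a replacement policy that I leave out.

```python
class TLB:
    """Toy TLB whose entries are tagged with an address space identifier."""

    def __init__(self):
        self.entries = {}  # (asid, vpn) -> pfn

    def lookup(self, asid, vpn):
        """Return the cached PFN, or None on a TLB miss."""
        return self.entries.get((asid, vpn))

    def insert(self, asid, vpn, pfn):
        self.entries[(asid, vpn)] = pfn

    def flush(self):
        """Option 1: drop every translation on a context switch."""
        self.entries.clear()


tlb = TLB()
tlb.insert(asid=1, vpn=0x5, pfn=0x2A)
hit = tlb.lookup(asid=1, vpn=0x5)            # 0x2A: same process, same page
other_process = tlb.lookup(asid=2, vpn=0x5)  # None: same VPN, different process
```

With the identifier in the key (option 2), entries from different processes can coexist, so nothing has to be thrown away on a context switch.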

Page Table Entries

Summary

Take the number of bits in the physical address and subtract the page offset bits (the page size converted to bits); that is how many bits the PFN needs. Whatever is left over in the entry gives us some remaining bits to play with: metadata. In these bits, we can store access control, valid/present (again: reminding me of flickers of HPCA code), dirty, and caching control.
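Here is a rough sketch of packing a PFN and metadata bits into one entry. The bit positions and flag names are a made-up layout for illustration, not the layout of any particular architecture.

```python
# Hypothetical layout: low 12 bits hold flags, the bits above hold the PFN.
PFN_SHIFT = 12
VALID = 1 << 0     # page is present in physical memory
WRITABLE = 1 << 1  # access control: writes allowed
DIRTY = 1 << 2     # page has been modified since it was loaded
NO_CACHE = 1 << 3  # caching control: bypass the cache for this page


def make_pte(pfn, flags):
    """Pack a frame number and flag bits into a single integer entry."""
    return (pfn << PFN_SHIFT) | flags


def pte_pfn(pte):
    """Extract the frame number from an entry."""
    return pte >> PFN_SHIFT


def pte_has(pte, flag):
    """Check whether a flag bit is set in an entry."""
    return bool(pte & flag)


pte = make_pte(0x2A, VALID | DIRTY)
```

For this entry, `pte_pfn(pte)` gives back `0x2A`, `pte_has(pte, DIRTY)` is true, and `pte_has(pte, WRITABLE)` is false.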

Page Fault

Summary

If a process accesses an address that has no physical page assigned to it, a page fault will occur (not a big deal), and the page fault handler will run. This handler starts by checking the free list (reminds me of Computer Systems: A Programmer’s Perspective). If a free page is available, the handler uses it, and the process resumes by retrying the access. But if no page is available, the handler needs to evict one first, then start up again.
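The handler's two paths can be sketched as follows. The `Memory` class is hypothetical, and FIFO eviction is my assumption; the notes do not say which replacement policy is used. Writing a dirty victim back to disk is also left out.

```python
from collections import OrderedDict


class Memory:
    """Toy physical memory with a free list and FIFO eviction (an assumption)."""

    def __init__(self, num_frames):
        self.free_list = list(range(num_frames))  # frames not yet handed out
        self.resident = OrderedDict()             # vpn -> pfn, oldest mapping first

    def handle_page_fault(self, vpn):
        if self.free_list:
            pfn = self.free_list.pop()            # a free frame is available: use it
        else:
            # No free frame: evict the oldest resident page and reuse its frame.
            _victim_vpn, pfn = self.resident.popitem(last=False)
        self.resident[vpn] = pfn
        return pfn

    def access(self, vpn):
        """Return the frame for vpn, faulting it in first if necessary."""
        if vpn not in self.resident:
            self.handle_page_fault(vpn)
        return self.resident[vpn]                 # the retried access now succeeds


mem = Memory(num_frames=2)
first = mem.access(10)  # fault, served from the free list
mem.access(11)          # fault, served from the free list
third = mem.access(12)  # fault with no free frames: page 10 is evicted
```

After the third access, page 10 is no longer resident and page 12 occupies the frame that page 10 used to have.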

Virtually Indexed, Physically Tagged Caches

Summary

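This optimization changes the TLB and cache lookups from sequential to parallel. The cache index comes from the page offset bits, which are identical in the virtual and physical address, so the cache can be indexed while the TLB is still translating the page number. The physical tag is then compared once the translation arrives.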

Quiz

Summary

When calculating the max number of entries in a virtually indexed, physically tagged cache, we need not worry about the virtual or physical address size. We just need to think about the page size and the size of the cache blocks, because the index and block offset bits have to fit inside the page offset.
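As a rough worked example of that limit: the function names and the 4 KiB page, 64-byte block, and 8-way figures below are my own example values, and "entries" is read here as sets (entries per way).

```python
def max_vipt_sets(page_size, block_size):
    """Largest number of sets that can be indexed using only page-offset bits."""
    # index bits + block offset bits <= page offset bits
    # so: sets * block_size <= page_size
    return page_size // block_size


def max_vipt_cache_bytes(page_size, associativity):
    """Largest total cache size under the same constraint."""
    # sets * block_size is at most page_size; each set holds `associativity` blocks
    return page_size * associativity


sets = max_vipt_sets(page_size=4096, block_size=64)                 # 64 sets
capacity = max_vipt_cache_bytes(page_size=4096, associativity=8)    # 32768 bytes = 32 KiB
```

Neither function takes the virtual or physical address width as an input, which matches the takeaway from the quiz. Growing the cache beyond the page size means adding ways rather than sets.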